Google algorithm updates change frequently, and keeping up with them is a challenging task for SEO professionals. Understanding these updates demands careful research and solid technical knowledge.

What Do Google Algorithms Mean?

In simple words, the Google algorithm is a system that retrieves data from the search index and delivers the best possible results to the users.

There are numerous factors taken into consideration by the Google algorithm when it comes to ranking a webpage and actually delivering the most relevant result to the users.

Google didn’t always update its algorithm as frequently as it does now. However, advances in technology, changes in the way people make search queries, and several other factors pushed the search engine to introduce new updates more often.

All Google Algorithm Updates

Let’s take a closer look at the major algorithm updates that Google has introduced to improve the user experience of searchers.

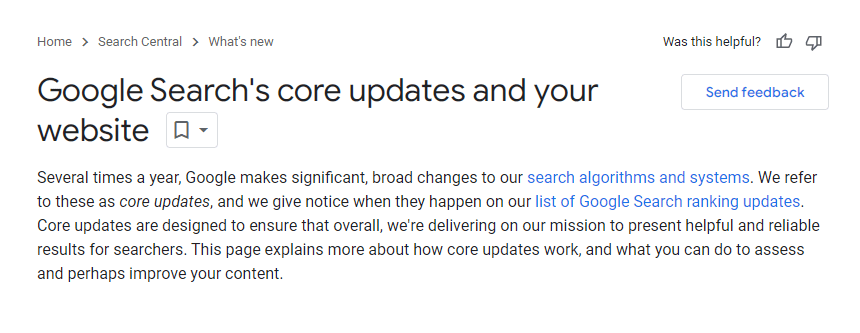

Florida

Google introduced the Florida update in 2003. Yes, that was two decades back, but the importance of this update still stands firm. Several more updates followed over the rest of the decade, with Florida being the first major one.

The Florida update rolled out in November 2003, just before the Christmas sale season, which forced a lot of sites, eCommerce sites in particular, to change their SEO strategies.

What was the Florida Update?

The Florida update was all about reducing spam tactics like keyword stuffing. It was a time when black-hat SEO was dominant, and as a result, a lot of legitimate sites that delivered real value didn’t rank and went unnoticed.

Google has never fully disclosed what the Florida update changed, but from the clues it shared and the results that site owners observed, it was quite evident that the update targeted the rankings of sites following black-hat tactics.

With the Florida update, Google put more emphasis on websites that provided content relevant to the user’s search query, and rewarded the pages that matched the query well. Florida is also often linked with the “nofollow” concept, although Google introduced nofollow later, in January 2005. With nofollow, digital marketers could tell Google which links it shouldn’t consider when ranking a site.

Another concept often associated with the Florida update is Latent Semantic Indexing (LSI), a technique for modeling the relationship between words and concepts. Google has never confirmed that it uses LSI, so treat this link as SEO folklore rather than a documented change.

Changes Made to Google Algorithm

The Florida update is widely believed to have changed how Google judged the relevance of a website and the links pointing to it. PageRank, Google’s link-based authority algorithm, already existed before Florida. Web pages with relevant & quality inbound links continued to be considered authoritative, which had a positive impact on their search engine results.

SEO professionals of the time also described a “Sandbox” effect, an unconfirmed filter that was said to hold back sites that looked spammy or delivered low-quality content. These sites witnessed a considerable drop in rankings, and rightly so.

Some SEO sources also describe a “Hammer” system that penalized websites following tactics like keyword stuffing. The reported penalties ranged from reduced visibility to removal of the website from the search engine results.

There were a number of changes made to Google’s algorithm with the motive of reducing black-hat SEO and making sure that only the sites practising legit SEO tactics and delivering some valuable content ranked in the search engine results.

Recovery from the Florida Update

Yes, the Florida update was introduced way back in 2003, but as mentioned, the principles behind it still matter. The tactics it targeted can still hurt your website rankings, and hence, avoiding them can play a significant role in your success.

Some basic steps you can follow to make your website comply with the Florida update are as follows.

Stay Away from the Keyword Stuffing

Keyword stuffing means forcing keywords into the content even when they don’t fit naturally. It is a spammy tactic that Google hates. It’s no longer difficult for the search engine to recognize keyword stuffing and penalize websites for it. Use keywords naturally in your content and let the search engine do its job of crawling the content and ranking it.
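To make the idea concrete, here is a minimal Python sketch that measures how much of a text is taken up by one phrase. Google does not publish a keyword-density threshold, so the function name `keyword_density` and the sample sentences are assumptions made purely for illustration. Treat the number as a rough editing aid, not as a rule the search engine applies.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Return the share of words in `text` taken up by occurrences of `phrase`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Count every position where the phrase appears as a run of whole words.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)


# Hypothetical sample copy, written for this example only.
stuffed = "Cheap shoes here. Buy cheap shoes, best cheap shoes, cheap shoes online."
natural = "Our store sells running and walking shoes at prices that suit most budgets."

print(round(keyword_density(stuffed, "cheap shoes"), 2))
print(round(keyword_density(natural, "cheap shoes"), 2))
```

If one phrase accounts for a large share of the words on a page, the copy probably reads unnaturally and is worth rewriting.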

Don’t Use Irrelevant Links

The Florida update also focused on link analysis. Recognizing irrelevant & spammy links had been difficult for search engines, and it got easier after this update. Hence, make sure not to use any hidden or irrelevant links in your content, as they are a form of spam. Use the “nofollow” attribute on links you don’t want to vouch for.
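A quick way to review how links are marked up is to list every link on a page together with its `rel` value. The sketch below uses only Python’s standard `html.parser` module. The class name `LinkAudit` and the sample HTML are assumptions for illustration, and a real audit would fetch the pages of your own site instead.

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Collect every <a href> and note whether its rel attribute contains nofollow."""

    def __init__(self):
        super().__init__()
        self.links = []  # list of (href, is_nofollow) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, e.g. "nofollow sponsored".
        rel_tokens = (attr.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


# Hypothetical page fragment used only for this example.
sample_html = """
<p>Read the <a href="https://example.com/guide">guide</a> or this
<a href="https://example.org/ad" rel="nofollow sponsored">partner offer</a>.</p>
"""

audit = LinkAudit()
audit.feed(sample_html)
for href, nofollow in audit.links:
    print(href, "nofollow" if nofollow else "followed")
```

The output gives you a simple list to scan for paid or untrusted links that are still followed.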

Be Right with the Website Structure

From headings to URLs to navigation, it’s necessary for website owners to get the website structure right. Website structure largely shapes the user experience on a website, which is why it belongs on this checklist. Gone are the days when a website could succeed with a haphazard structure. Now, you need to be more logical about the way you structure your website.

Write High-Quality Content

Be it 2003 or 2023, the importance of content stays the same. If anything, it has increased since the Florida update. Hence, the content on your website has to be crisp, clear, and non-spammy, and it has to deliver the value that your visitors deserve. Relevant content provides a top-notch user experience and hence, helps your website stay on the right side of the Florida update.

Image Source: Google

Jagger

After Florida, Google introduced a number of minor tweaks & updates, but the Jagger update was a major one that came into existence in October 2005. It started a few weeks earlier, in September, and the rollout was completed in October.

What was the Jagger Update?

Jagger update covered a number of SEO aspects like a website’s backlink profile, content size & quality, and numerous other technical & non-technical aspects.

The Jagger update made the search engine put more emphasis on factors like content quality, domain authority, link quality, etc.

Google was committed to providing better search results to the audience, and the Jagger update was a clear testament to it.

Factors Affected by the Jagger Update

As mentioned, various factors were affected by Google’s Jagger update. Let’s have a look at them below.

- Link Quality: With the Jagger update, Google started putting more emphasis on link quality. Sites with high-quality authoritative links were rewarded with improved rankings, while the sites with low-quality backlinks were penalized with lower rankings. Here, high-quality links were considered signs of trustworthiness and authority, while bad links worked as a major red flag for the search engine.

- Website Authority: The Jagger update took the website authority factor to a whole new level. The website with relevant & quality content, a decent reputation, and an amazing engagement rate was rewarded with improved rankings.

- Duplicate Content: Before the Jagger update, sites with duplicate content weren’t noticed much, and eventually they didn’t face much difficulty getting ranked. However, after this update, Google degraded the rankings of the sites with duplicate content. This was a massive step in terms of making the domains improve their content and provide users with what they deserve.

The Jagger update caused a considerable fall in the rankings of numerous websites. This was Google’s effort to curb manipulative links & scraped content, and it succeeded to a great extent.

Image Source: Google

The Recovery

Yes, the Jagger update demanded alterations in multiple variables, but none of them were complex or difficult to deal with. Some reported recovery steps for this update were:

- Improving overall website structure and architecture.

- Removing internal & external links that aren’t relevant or valuable for the website visitors.

- Removing duplicate & scraped content from the website completely.

- Removing low-quality backlinks and making sure that no spammy tactics are followed to get more backlinks.

- Writing unique content and providing more value to the visitors with the content.
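Duplicate and scraped content from the steps above can be spotted with a simple text-similarity check. The sketch below compares pages by their overlapping three-word sequences (often called shingles) and scores the overlap with Jaccard similarity. This is a common textbook technique, not a description of how Google detects duplicates, and the function names and sample pages are assumptions for illustration.

```python
import re


def shingles(text: str, size: int = 3) -> set:
    """Return the set of overlapping word n-grams (shingles) in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


# Hypothetical page copy used only for this example.
page_a = "Our handmade leather wallets are stitched by hand and built to last for years."
page_b = "Our handmade leather wallets are stitched by hand and made to last for decades."
page_c = "Read the shipping policy before you place an international order with us."

sim_ab = jaccard(shingles(page_a), shingles(page_b))
sim_ac = jaccard(shingles(page_a), shingles(page_c))
print(f"a vs b: {sim_ab:.2f}")
print(f"a vs c: {sim_ac:.2f}")
```

Pairs with a high score are candidates for merging or rewriting. Where to draw the line is a judgment call for your own content.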

The Jagger update was the start of today’s SEO best practices. Yes, Google wasn’t as dominant in 2005, with search engines like Yahoo and MSN Search still active, but it still changed the SEO strategy for numerous businesses all over the world, and all for the right reasons.

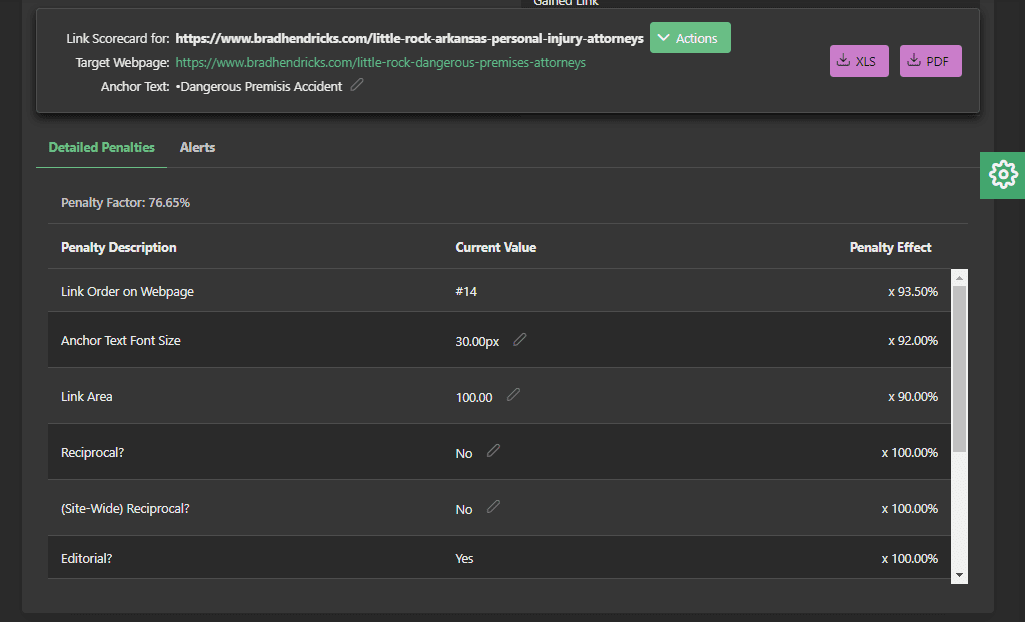

Big Daddy

It was in November 2005 that Google talked openly about the Big Daddy update. However, the rollout was completed in March 2006. It was an infrastructure update, and it had a significant impact on the search engine results pages (SERPs).

What was the Big Daddy Update?

The Big Daddy update was primarily a crawl update that focused on the trustworthiness of the inbound & outbound links of a site. If a site didn’t score well in terms of trustworthiness, not many of its pages were indexed. If a site already had all its pages indexed and didn’t score well, many of those pages were removed.

Crucial Factors of Big Daddy Google Algorithm Update

As mentioned, the Big Daddy update played a crucial role in altering the SERPs and all for good. The primary focus was to identify sites with poor quality inbound & outbound links and penalize them. Some other key factors related to this massive update are:

- Indexing Mechanism: The Big Daddy update brought various advancements in Google’s indexing mechanism that helped Google ensure that important and legitimate web pages were indexed rather than the web pages with poor quality inbound & outbound links.

- Canonicalization: When a website serves the same content at more than one URL, canonicalization is the process of choosing one of those URLs as the preferred (canonical) version. This update helped the search engine index and rank the canonical version of a webpage, while the duplicate versions were ignored or penalized.

- Duplicate Content: This was one aspect that troubled Google a lot. With each of its updates, Google has tried to reduce the issue as much as possible. Big Daddy was another effort by the search engine that helped it handle duplicate content with a bit more efficiency, and hence, penalize the domain appropriately.

- Handling URL Parameters: Handling long & unnecessary URL parameters was made a bit easier for Google by the Big Daddy update. It helped the search engine with more accurate indexing, and hence, better overall results.
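The canonicalization and URL parameter points above can be illustrated with a small URL normalizer built on Python’s standard `urllib.parse` module. This is a minimal sketch, not Google’s logic: the `TRACKING_PARAMS` list, the choice of HTTPS, and the choice of the non-www host as the preferred version are all assumptions you would adapt to your own site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list of parameters that do not change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "ref"}


def normalize_url(url: str) -> str:
    """Map URL variants of the same page to one preferred form."""
    parts = urlsplit(url)
    scheme = "https"  # assumption: the site serves every page over HTTPS
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]  # assumption: the non-www host is the preferred one
    path = parts.path.rstrip("/") or "/"
    # Drop tracking parameters and sort the rest so their order does not matter.
    query = sorted(
        (k, v) for k, v in parse_qsl(parts.query) if k.lower() not in TRACKING_PARAMS
    )
    return urlunsplit((scheme, host, path, urlencode(query), ""))


variants = [
    "http://www.example.com/shoes/?utm_source=newsletter",
    "https://example.com/shoes?ref=home",
    "https://EXAMPLE.com/shoes/",
]
print({normalize_url(u) for u in variants})
```

When several crawled URLs collapse to the same normalized form, they are good candidates for a redirect or a canonical tag pointing at a single version.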

The Recovery

Recovery from this Google algorithm update demanded a new approach, a new strategy, and certain changes to the linking strategy of the sites. Some of the best practices that brands followed to recover from this update are:

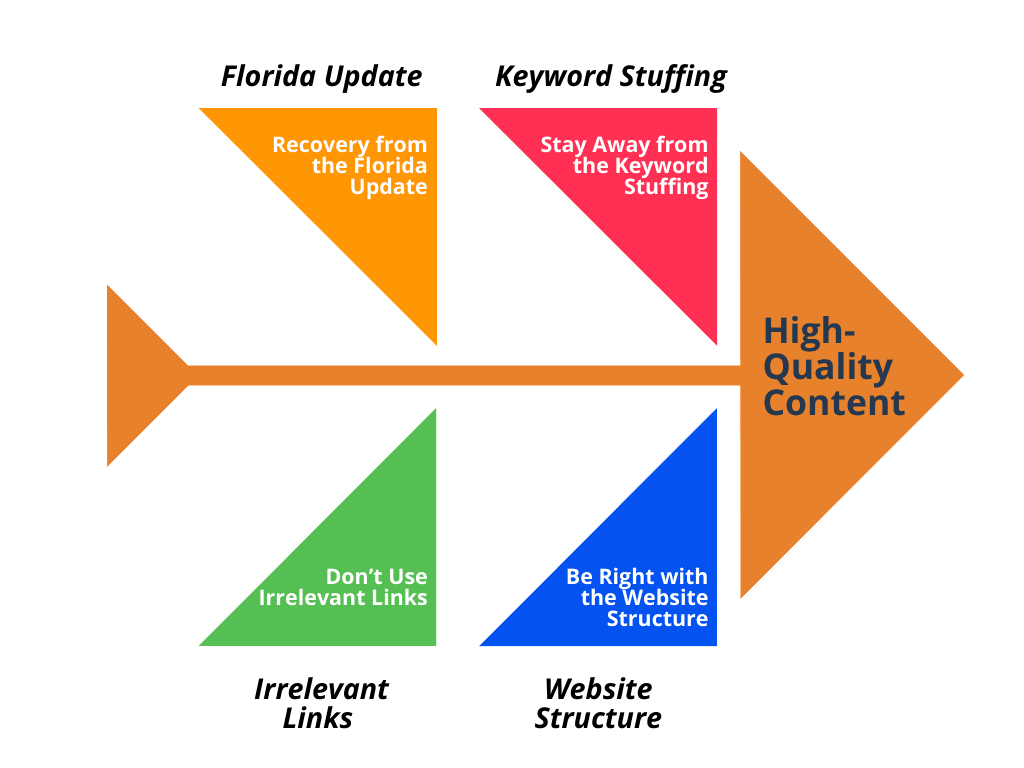

- SEO Audit: SEO audit helped the website owners to analyze the links on their website along with the URL structure, and other several crucial aspects that might play a role in terms of getting the web pages indexed. It helped them to recognize the potential SEO flaws and overcome them.

- Content Audit: Content audit was another comprehensive thing that helped website owners cope with the Big Daddy update. With a content audit, the website owners were able to recognize duplicate & low-quality content on their website and hence, fix the same. Writing high-quality content on their website shielded their website from the Big Daddy update to a considerable extent.

- Checking Backlinks & Getting Rid of Spammy Ones: As mentioned, this update primarily focused on the low-quality & spammy inbound & outbound links on a website. Hence, it was necessary for the website owners to get the backlinks checked, and focus on high-quality links rather than going with spammy tactics to gain more backlinks.

- Improving the Overall Website Structure: Enhancing the overall website architecture and providing easy navigation to the users was quite important after this update. It was all about providing user-friendly URLs, relevant links, and a clean menu.

Phases of the Big Daddy Update

It took around 4 months for Google to launch the Big Daddy update completely. Hence, it is clear that it was launched in phases, with each phase improving on the previous one. Let’s have a look at the different phases in which this update was launched.

November 2005: It was the initial phase of this update. Website owners started noticing fluctuations in website rankings.

December 2005: Google continued to work on the Big Daddy update. Indexing & crawling issues were reported. Google primarily focused on the factors like duplicate content & URL handling.

January 2006: The accuracy of the search engine results was compromised due to this update. Hence, in this phase, Google worked on it and the update was now focused on improving the accuracy of the search engine results along with several other indexing issues.

March 2006: This was the phase when the Big Daddy update got a bit stable and website owners started aligning with the update. Many more updates were introduced after the Big Daddy update and this update worked as a foundation for them.

Big Daddy update was probably one of the biggest efforts from Google to enhance the search engine results and to reduce the spammy inbound & outbound links along with duplicate content. Yes, it took some time for the website owners to align with the update, but it was necessary.

Vince

Okay, enough of working on spammy backlinks, and duplicate content, for now! No, Google didn’t say that, but Vince update did. Vince update, also fondly termed as “Brand Update” actually did a lot of good to the brands.

The Vince update was launched in January 2009. Vince update could be considered a simple change, however, the impact that the update imparted was massive.

What was the Vince Update?

Google didn’t disclose the exact details of the update, but the results noticed by SEO professionals made its intent fairly clear. The Vince update primarily put greater emphasis on factors like brand authority and brand trustworthiness when ranking a website.

After the Vince update, big brands started appearing more often than before, mostly for broad, high-volume keywords rather than long-tail ones. Of course, trustworthiness and domain authority were a couple of other factors that played a crucial role here.

Crucial Factors of the Vince Update

As mentioned, exact factors that imparted the impact on website rankings were never mentioned by Google, but as per the results noticed, some crucial factors that circled around this update are:

- Website Trustworthiness: Websites that gave signals of credibility and trustworthiness were ranked higher. This is one reason why reputed brands benefitted in terms of website rankings.

- Domain Authority (DA): DA is a third-party metric rather than a score Google has confirmed using, but before this update, website owners often neglected the signals behind it. The Vince update appeared to reward authoritative domains, and it was after this point that website owners started taking DA seriously and made efforts to boost it.

- On-Page & Off-Page Aspects: While the update was primarily focused on trustworthiness, it still considered factors like content quality & relevance, link strategy, etc. to see if the domain is actually trustworthy. Also, the off-page factors like social media presence were considered to see to what extent the website is trustable.

Recovery from Vince Update

As Google wasn’t entirely clear about the Vince update, recovering from it and making the site cope with it was difficult. However, based on the noticed results, some steps taken by the SEO community to deal with this update were:

- Improving Overall Website Quality: Optimizing the website structure efficiently in terms of navigation & design helped the website owners considerably. It made Google believe that the website is clean and doesn’t confuse the users.

- Optimizing On-Page & Off-Page SEO: It was necessary for the website owners to optimize both, on-page & off-page SEO to ensure that users face a smooth experience on their domain, and it gets easy for Google to trust the website & rank them. Strategies like using long-tail keywords efficiently, being right with the content, etc. were implemented by the website owners.

- Social Media Marketing: It started way before, but after the Vince update, the importance of social media marketing increased considerably. Website owners started leveraging social media platforms to advertise their brand and, hence, establish their reputation.

- Trying to Enhance User Engagement Rate: Website owners made efforts to reduce bounce rate, boost organic CTR, and provide a smooth user experience to increase the overall engagement rate. Enhancement in the user-engagement rate implied that it became a bit easier for Google to trust the websites and hence, rank them accordingly.

Yes, the Vince update was a bit unfair to new domains, as it was difficult for Google to trust them even when they followed entirely legit tactics to compete. However, the update worked massively in favor of popular brands and lifted their rankings & visibility in the search engine results considerably.

Caffeine

It was in August 2009 that Google announced the Caffeine update. Caffeine became one of the most crucial Google algorithm updates, even when the present-day scenario is considered.

Yes, Google announced the update in August 2009, but it provided a developer preview for months so that both SEO professionals & developers could pinpoint any issues regarding the update. That alone reflects how massive the update was.

After a lot of feedback & amendments, the entire Caffeine update was rolled out in June 2010.

What was the Caffeine Update?

The Caffeine update was primarily focused on the indexing system. This update allowed the search engine to crawl and store data far more efficiently than before. Before this update, Google refreshed its index in layers, based on how fresh each layer needed to be, while after Caffeine, Google crawled and indexed content continuously, in small portions, often within seconds. Hence, fresh content was no longer missed, which was a problem Google & website owners came across before the update.

What was the Need for the Caffeine Update?

Unlike other updates, the purpose of the Caffeine update wasn’t clear to many SEO professionals. After all, what’s the need to change the indexing system entirely?

Well, Google’s indexing system was designed & launched in the year 1998, while this update was launched in 2009. In 1998, there were close to 2.5 million websites online, while in 2009, there were around 240 million websites. Yes, the change was drastic, and Google’s indexing system was simply not capable of crawling & indexing all the data seamlessly.

A lot of crucial information was missed in terms of indexing and several issues were reported. Plus, the growing volume of images, video, and other media was hard for the old system to index. Google understood this well over time, and hence, decided to advance its indexing system. As mentioned, advancement in the indexing meant no fresh information was missed and indexing happened within a few seconds.

Crucial Factors of the Caffeine Update

The Caffeine update redefined how Google crawled, indexed & ranked website data. Let’s check out some of the most important factors of the Caffeine update below.

- Indexing: This update allowed the mighty search engine to index website data in a few seconds, which not only enhanced the indexing efficiency but also made it faster to include the new content in the search results.

- Expanded Range: Indexing media-rich content was an issue that Google faced before this update was launched. After Caffeine, it was made easy for the search engine to index & crawl media-rich content. It included a website with rich graphics & even social media posts.

- Preference for Fresh Content: With the Caffeine update, it was clear that Google would prioritize fresh & relevant content in terms of indexing & ranking. Hence, it was now important for website owners & SEO professionals to keep updating the content on their website regularly.

- Independent Indexing of Subdomains: After Caffeine, the subdomains of a website were considered separate entities, and hence, they were ranked independently of the main domain.

Recovery from the Caffeine Update

So, how did the website owners recover from the massive Caffeine update? Let’s check out the answers.

- Technical SEO: Website owners started fixing issues like crawling errors, broken links on the website, use of incorrect metadata & heading tags, etc.

- Content Update: This update primarily emphasized ranking the website with quality content. Website owners now started taking the same seriously and started updating content with the latest & valuable data.

- Website Optimization: Overall website performance was a key thing here, and hence, website owners started optimizing the website structure. Also, the flaws in factors like website responsiveness, mobile optimization, etc. were recognized and fixed.

- Continual Monitoring of Website Indexing: The Caffeine update meant an entire change in Google’s indexing & crawling mechanism. Hence, it became mandatory for website owners to keep track of their website indexing and try to understand the exact pattern Google follows. It was necessary to recognize the changes they would be required to make to get their web pages indexed.

Caffeine update was a massive one, but given the number of websites, and the increasing popularity of the internet those days, it was absolutely a must. There was some chaos initially, but the website owners who played smart, studied the update well, and understood the importance of fresh content on the website didn’t face much trouble.

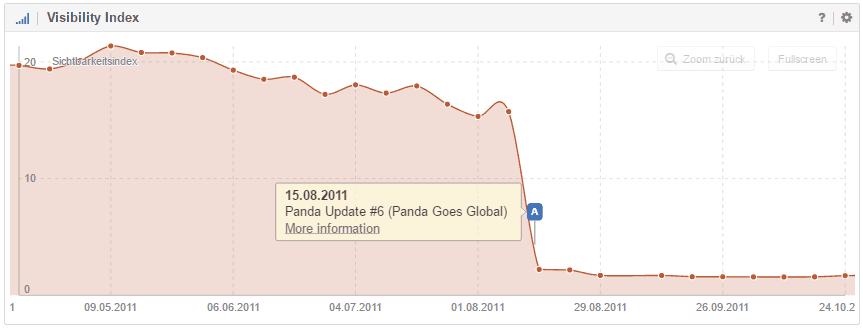

Panda

The Panda update was launched in February 2011. This update was part of Google’s efforts to eradicate black-hat SEO tactics. This was the time when tactics like content farming and spammy link building were predominant.

Initially known as “Farmer”, the Panda update was quite successful in terms of helping Google recognize low-quality websites and reward high-quality websites with better rankings.

What was the Panda Update?

The Panda update was primarily meant to reduce the rankings of websites with low-quality content and rank the websites that delivered valuable content. Also, the sites that presented in-depth stats, facts, and analysis were rewarded with improved rankings. According to Google, around 12% of search queries were affected by the Panda update.

Google didn’t disclose the exact aspects of this update but presented a series of questions that defined the factors the search engine is going to consider in terms of ranking a website. Let’s have a look at these questions below.

- Would you trust the information presented in this article?

- Is this article written by an expert or enthusiast who knows the topic well, or is it more shallow in nature?

- Does the site have duplicate, overlapping, or redundant articles on the same or similar topics with slightly different keyword variations?

- Would you be comfortable giving your credit card information to this site?

- Does this article have spelling, stylistic, or factual errors?

- Are the topics driven by the genuine interests of readers of the site, or does the site generate content by attempting to guess what might rank well in search engines?

- Does the article provide original content or information, original reporting, original research, or original analysis?

- Does the page provide substantial value when compared to other pages in search results?

- How much quality control is done on content?

- Does the article describe both sides of a story?

- Is the site a recognized authority on its topic?

- Is the content mass-produced by or outsourced to a large number of creators, or spread across a large network of sites, so that individual pages or sites don’t get as much attention or care?

- Was the article edited well, or does it appear sloppy or hastily produced?

- For a health-related query, would you trust information from this site?

- Would you recognize this site as an authoritative source when mentioned by name?

- Does this article provide a complete or comprehensive description of the topic?

- Does this article contain insightful analysis or interesting information that is beyond obvious?

- Is this the sort of page you’d want to bookmark, share with a friend, or recommend?

- Does this article have an excessive amount of ads that distract from or interfere with the main content?

- Would you expect to see this article in a printed magazine, encyclopedia or book?

- Are the articles short, unsubstantial, or otherwise lacking in helpful specifics?

- Are the pages produced with great care and attention to detail vs. less attention to detail?

- Would users complain when they see pages from this site?

As you can clearly see, all these questions were based on how authoritative the content of a website is and to what extent the website can be trusted. Plus, the quality of the user experience offered by the website was also covered in this series.

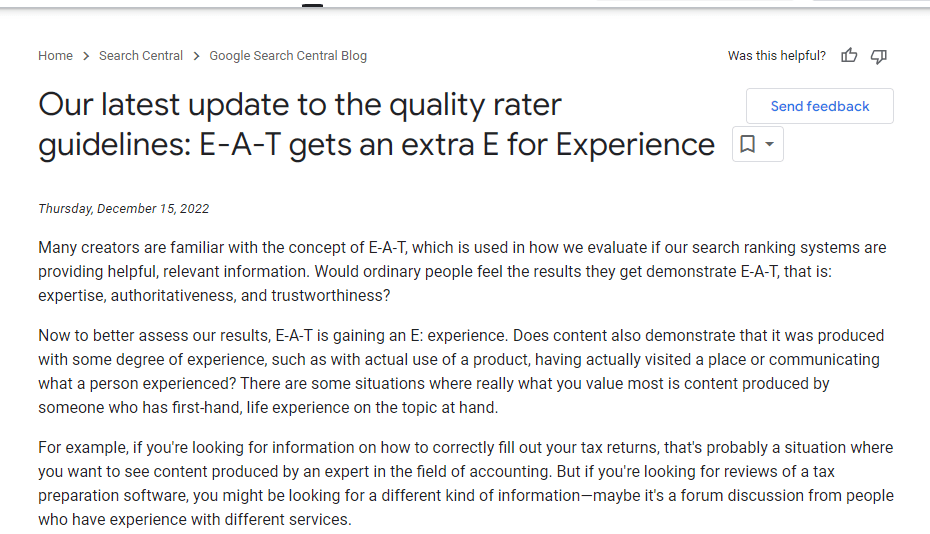

How was E-A-T Related to the Panda Update?

Google introduced the E-A-T concept in the year 2014. E-A-T stands for Expertise, Authoritativeness, and Trustworthiness. The concept was closely related to the Panda update. How? Well, this concept primarily focused on:

- Avoiding thin & non-relevant content, just like the Panda update.

- Downgrading content that lacked information from authoritative sources. The Panda update was also focused on the authority of the information that the website provides.

- Downgrading websites that incorporated too many ads and non-useful links. The Panda update also included this factor as a focus point.

Ultimately, E-A-T was derived keeping the Panda update in mind. Not many sources mention that, but I would simply define E-A-T as an enhancement to the Panda update.

Image Source: Google

Recovery from Panda Update

Several domains were initially hit by the Panda update. However, several strategies were implemented by the website owners to recover from the same. Let’s check them out below.

- Improving the Website Content Grammatically & Adding More Relevancy to the Content: Well, content was the primary focus of the Panda update. The websites that had amazing content didn’t face much trouble, while the platforms with thin content suffered seriously.

Several sites had to change the entire content on their site, which meant a lot of effort & unwanted hassles for them. Not only the relevance, but the content grammar, tone, etc. mattered too.

- Abandoning Content Farm Practice: A lot of digital marketing agencies hired too many writers to produce large volumes of website text in the hope of winning the algorithm’s trust. However, the Panda update forced them to abandon this practice entirely and focus only on quality rather than quantity.

The content that was posted usually incorporated tactics like keyword stuffing. With the abandonment of this practice, keyword stuffing declined considerably, which was a great thing in terms of content readability.

- A Big No to Excessive Ads: Excessive ads did kill the SEO of a website. The Panda update questionnaire did incorporate a direct question on ads, which meant that websites with excessive ads suffered seriously in terms of website rankings. Hence, in order to recover from this update, the sites that had excessive ads reduced the number of ads considerably.

It was a no-brainer that excessive ads hurt the user experience, and this update was all meant to enhance it on any platform. Hence, the update took the factor of excessive ads seriously and made sure that user experience wasn’t hampered by any means.

- Using NoIndex & NoFollow Concept Wisely: Well, NoFollow was always a useful concept, but website owners started using it a bit more after the introduction of the Panda update. There was nothing spammy about it. Website owners simply marked the links they didn’t wish Google to follow and the pages they didn’t wish Google to index. As Google didn’t follow or index what was marked, the chances of a website being unnecessarily considered spammy reduced considerably.

The Panda Google algorithm update redefined, to a good extent, the way websites approached content. This update was purely meant to downgrade the rankings of websites with thin content and improve the rankings of websites with strong content.

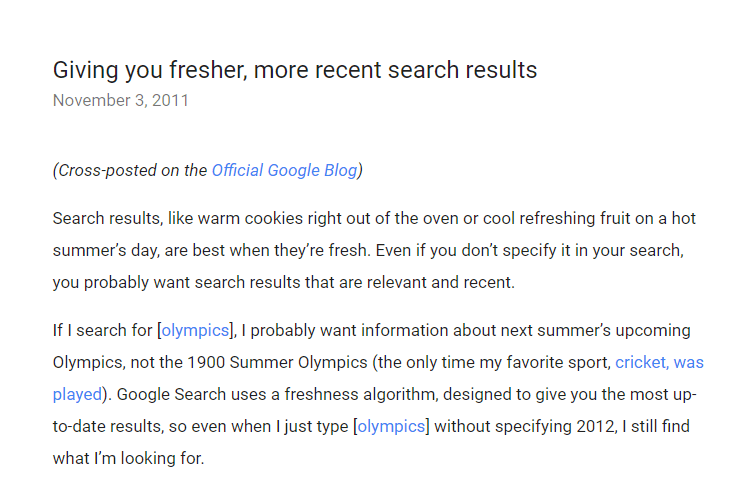

Freshness Algorithm

Google’s Freshness algorithm update was another significant advancement to its algorithm. It primarily focused on improving the search engine results and matching the results with the user’s query as much as possible.

The Freshness algorithm was introduced in November 2011. It impacted around 35% of search queries. This update brought a significant change to how the websites were ranked.

What was the Freshness Algorithm?

Google introduced the Freshness algorithm update to deliver fresh search results to search engine queries. The Caffeine update made it possible for the search engine to index web pages quickly, and the Freshness algorithm update was built on that infrastructure.

Google’s Freshness algorithm also recognized that not all content needs to be fresh. Let’s understand it with an example. An article with the topic “Who won the FIFA World Cup in 1930?” need not be updated. Yes, the website owner might consider the inclusion of small pieces of information here & there, but it’s absolutely not mandatory for this article to be updated.

On the other hand, an article with the topic “Happiest countries in the world” is required to be updated on a regular basis as the list keeps on changing every year. Plus, the content that was old & outdated, but ranked higher in search engine results made way for other websites that provided fresh and comparatively valuable content.

With the Freshness algorithm, Google classified queries into 3 types of time-related queries, which were:

- Current Events: Queries related to all the current happenings all over the world were included in this type.

- Continuously Updating Events: There are certain events that are updated at regular intervals. Think of Apple launching & upgrading iPhone devices at frequent intervals. They might or might not trend, but are updated at frequent intervals.

- Recurring Events: All the events like election results, TV shows, etc. were included in this time-related query.

Crucial Factors of the Freshness Algorithm Update

Let’s check out the important factors considered by the Freshness Algorithm update below.

- Frequency of the Updates & Changes: The sites that updated the content regularly & added new & relevant content on the website were considered fresh and were pulled upwards, and the sites with outdated content were downgraded.

- Content Type: There are various types of content. Each of these types was analyzed and ranked according to the freshness & relevance in various domains.

- Date of the Published Content: This was a crucial factor that this algorithm considered before ranking a website. The more recent the publication date, the better the chances of the content getting ranked. Of course, there were several other factors considered, but the publication date was a basic factor.

- Website Authority: Well, this was one factor that Google considered with every update. The website authority and the relevance of the content on a web page played a crucial role in terms of website rankings.
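One way to picture how publication date and relevance can combine is a simple time-decay weight that applies only to queries that call for fresh results. Google has not published the formula it uses, so everything in this Python sketch is an assumption made for illustration: the exponential decay, the 30-day half-life, and the function names `freshness_weight` and `blended_score`.

```python
from datetime import date


def freshness_weight(published: date, today: date, half_life_days: float) -> float:
    """Exponential decay: the weight halves every `half_life_days`."""
    age_days = max((today - published).days, 0)
    return 0.5 ** (age_days / half_life_days)


def blended_score(relevance: float, published: date, today: date,
                  needs_freshness: bool, half_life_days: float = 30.0) -> float:
    """Apply the recency weight only to queries that call for fresh results."""
    if not needs_freshness:
        return relevance
    return relevance * freshness_weight(published, today, half_life_days)


# Hypothetical dates and relevance scores used only for this example.
today = date(2023, 6, 1)
old_page = date(2022, 6, 1)
new_page = date(2023, 5, 25)

# A time-sensitive query: the older page loses most of its score.
print(blended_score(0.9, old_page, today, needs_freshness=True))
print(blended_score(0.8, new_page, today, needs_freshness=True))
# An evergreen query: the age of the page is ignored.
print(blended_score(0.9, old_page, today, needs_freshness=False))
```

The point of the sketch is the split between the two kinds of query. A page about the 1930 World Cup keeps its score however old it is, while a page on a fast-moving topic has to stay current to compete.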

Recovery from the Freshness Algorithm Update

Let’s check out the steps that website owners took to recover from the Freshness algorithm update.

- Regular Content Updates: Adding new & valuable information to the existing blogs and articles was an effective way to recover from this update. As the name clearly implies, the sites delivering fresh & valuable content were ranked, and the sites with outdated content made way for them.

- Writing Content on Trends & Latest Happenings: This was a useful way to improve website rankings as it worked as a clear signal of the fact that the website incorporates fresh content.

- Using Social Media: Promoting the content on social media, and encouraging the audience to share the content within their group was a way to give Google a signal of freshness and hence, boost the chances of getting ranked amidst the search engine results.

- Work Continuously to Improve User Experience: Website owners made efforts to improve the overall usability of the website. They provided easy navigation and easy-to-read content, optimized the page load speed, and much more. The ultimate goal was to reduce the bounce rate of the website.
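The "regular content updates" step can be turned into a simple content audit. The sketch below is only an illustration: the `Page` class, the `time_sensitive` flag, the URLs, and the one-year threshold are all assumptions made for this example, not signals or values published by Google.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Page:
    url: str
    last_updated: date
    time_sensitive: bool  # hypothetical editorial flag, not a Google signal


def pages_to_refresh(pages, today, max_age_days=365):
    """Return URLs of time-sensitive pages not updated within max_age_days."""
    stale = []
    for page in pages:
        age_days = (today - page.last_updated).days
        # Evergreen pages (time_sensitive=False) are never flagged.
        if page.time_sensitive and age_days > max_age_days:
            stale.append(page.url)
    return stale


# Hypothetical pages mirroring the examples used in this section.
pages = [
    Page("/fifa-world-cup-1930-winner", date(2015, 6, 1), time_sensitive=False),
    Page("/happiest-countries", date(2022, 3, 20), time_sensitive=True),
    Page("/latest-iphone-models", date(2024, 1, 10), time_sensitive=True),
]

print(pages_to_refresh(pages, today=date(2024, 6, 1)))
```

The World Cup article is skipped however old it is, which matches the idea that not every piece of content needs to be fresh.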

Page Layout Algorithm

Google received a lot of complaints about poor user experience caused by excessive ads, many of them displayed in the topmost section of the page. This is why Google launched the Page Layout algorithm: to discourage sites from displaying excessive ads and to help users see content, rather than ads, when the page loads.

The Page Layout algorithm was launched in January 2012 to enhance the user experience and spare users the unnecessary ads they saw on websites.

What was the Page Layout Algorithm?

With the Page Layout algorithm, Google identified the web pages that didn’t have enough content above the fold or had a large number of ads and lowered their rankings going forward. The reason was obvious: the user experience suffered badly when users saw only ads on landing on a website.

It took a long time for several websites to recover from this update. While some website owners found this update harsh, the Page Layout algorithm was a need of the hour as users were simply frustrated seeing the ads more than the content.

Recovery from the Page Layout Algorithm

So, how did websites recover from this algorithm impact? Yes, certain websites did take a long time to recover but the domains that followed the right steps took comparatively less time to get ranked again.

- Removing Ads on the Top: Well, this was the most crucial thing that websites were required to do to recover from this update. Website owners removed all the ads from the top and strategized their placements. It fulfilled the basic condition of this update, which was a massive benefit.

- Writing Engaging Content on the Top: The first impression does matter. Hence, it was necessary for website owners to place engaging content in the topmost section of a website. This was the content that users read as soon as they landed on the website, so it played an important role in deciding whether they would explore further or return to the search engine without taking any action.

- Using Quality Graphics on the Top: Alongside content, using quality graphics was a simple yet effective way to recover from this update. Graphics always draw the interest of users, and using them at the top pushed them to move further and visit other website pages, which was definitely a massive success for the website.

- Special Emphasis on Mobile: Excessive ads on top hurt the user experience on mobile devices even more than on desktop. Hence, it was necessary to place ads in a way that users didn’t have to scroll down much to read the content.

Numerous websites didn’t like the Page Layout algorithm much. The reason? They felt their revenue might drop if they didn’t show ads on top. However, placing the ads strategically on a website did both: it maintained their revenue and provided a better user experience to the visitors. They eventually understood it, and things started falling into place after a while.
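To get a feel for what "too many ads on top" means, one can estimate how much of the first screen is taken up by ads. The sketch below is a rough illustration only: Google has not published a threshold or a formula, and the block positions, the 800-pixel fold height, and the function name are assumptions made for this example.

```python
def ad_share_above_fold(blocks, fold_height):
    """Share of the visible first screen occupied by ads.

    blocks: list of (kind, top, height) in pixels, with kind "ad" or "content"
    and top >= 0. Only the part of each block above fold_height is counted.
    """
    ad_px = 0
    total_px = 0
    for kind, top, height in blocks:
        visible = max(0, min(top + height, fold_height) - top)
        total_px += visible
        if kind == "ad":
            ad_px += visible
    return ad_px / total_px if total_px else 0.0


# Hypothetical layout with ads at the very top of the page.
before = [("ad", 0, 300), ("content", 300, 200), ("ad", 500, 250), ("content", 750, 900)]
# The same page after the top ad is removed and content moves up.
after = [("content", 0, 500), ("ad", 500, 250), ("content", 750, 900)]

print(ad_share_above_fold(before, fold_height=800))  # 0.6875
print(ad_share_above_fold(after, fold_height=800))   # 0.3125
```

In this made-up layout, removing the top ad cuts the ad share of the first screen from about 69% to about 31%.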

Venice

The Venice Google algorithm update, launched in February 2012, was a significant update in terms of delivering personalized results to users. Its primary motive was to show users results based on their location, which made it an important step from a user-experience point of view.

The name suggests the Italian city, but Google has never confirmed that the update was named after it. Hence, we have no idea why the name Venice was chosen.

What was the Venice Update?

Google continuously worked towards improving the user experience of the searchers, and the Venice update was one step towards it. With this update, the algorithm detected the location of the searcher and displayed results accordingly. Plus, the accuracy of the search results improved when the user included a city in the query.

Crucial Factors of the Venice Update

Let’s check out some factors that were considered with the Venice Google algorithm update.

- Searcher’s Locale: The location was a primary focus point of this update. This update focused on delivering more locally relevant content by making sure that users see results based on the location nearer to them.

- Google My Business Profile: The Google My Business profile was another important aspect considered in terms of displaying results. After this update, Google integrated business listing information to show local businesses in search engine results.

- Relevance of the Content: Websites that had location-specific content were ranked for the specific keywords. For instance, if the website incorporated content like “best restaurants in New York”, and the user searched for “top restaurants in New York”, it still displayed that website. Ultimately, Google’s goal here was to deliver the best results to the searchers even when the query was a close variant rather than an exact match of the keyword.

Recovery from the Venice Update

Well, recovery from the Venice update wasn’t that difficult, as the update’s primary focus was to improve user experience & not to penalize the websites by any means. However, some steps that website owners took to cope with this update are listed below.

- Trying to Incorporate Location, If Relevant: As mentioned, the primary goal of this update was to deliver results based on location. Hence, website owners mentioned their location wherever it was relevant so as to get their websites ranked for the matching searches.

- Improving Google My Business Profile: The importance of the Google My Business profile grew considerably after the Venice update. From including locations & appropriate keywords in GMBs to marketing them correctly, website owners started taking GMB profiles seriously.

- Generating Local Citations: Website owners acquired citations & listed their domains on reputed directories. Here, the NAP (name, address & phone number) concept came into the picture and local citations actually boosted the domain authority of a website considerably.

- Enhanced Focus on Local SEO: Before Venice, the primary goal of the website owners was to win the international SEO race. However, after this Google algorithm update, they started strategizing separately for local SEO, which meant an equal focus on both the international & local markets.

It was after the Venice update that local SEO was truly recognized and website owners started taking it seriously. Plus, the outcome was fruitful for Google, as users started seeing results based on their locale.
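The NAP concept mentioned above is mostly about consistency: the same name, address & phone number should appear on every directory. Below is a minimal sketch of such a check. The business details, the directory names, and the normalization rules are hypothetical and chosen only for illustration; real listings may need more careful address handling.

```python
import re


def normalize_nap(name, address, phone):
    """Lowercase, strip punctuation, and keep only digits in the phone number."""
    def clean(text):
        return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()

    digits = re.sub(r"\D", "", phone)
    return (clean(name), clean(address), digits)


def inconsistent_listings(reference, listings):
    """Return the sources whose NAP differs from the reference after normalization."""
    ref = normalize_nap(*reference)
    return [source for source, nap in listings.items() if normalize_nap(*nap) != ref]


# Hypothetical business and directory listings.
reference = ("Joe's Coffee", "12 Main St., New York", "(212) 555-0100")
listings = {
    "directory-a": ("Joe's Coffee", "12 Main St, New York", "212-555-0100"),
    "directory-b": ("Joes Coffee Shop", "12 Main St, New York", "212-555-0100"),
}

print(inconsistent_listings(reference, listings))  # ['directory-b']
```

Formatting differences such as punctuation or phone separators are ignored, while a genuinely different business name is flagged for correction.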

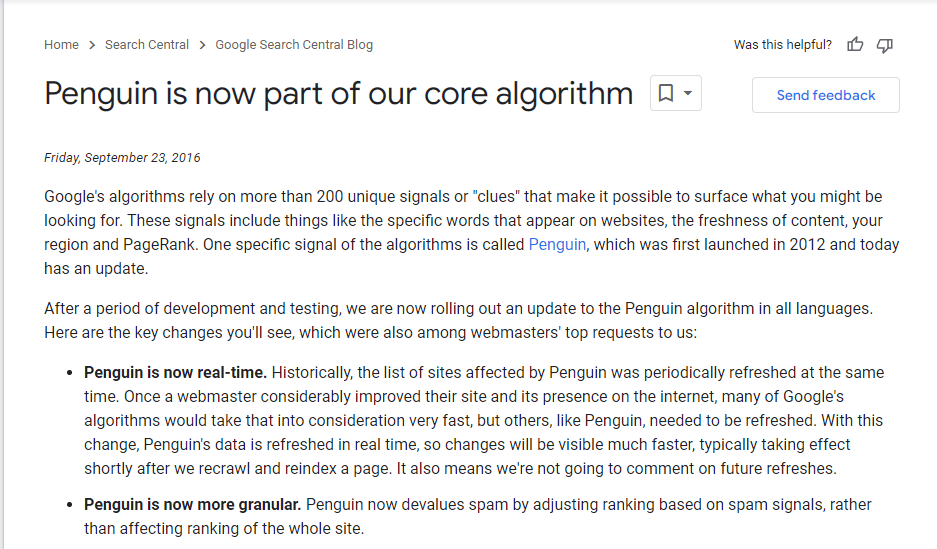

Penguin

The Penguin algorithm update was launched in April 2012, and it was indeed one of the biggest Google algorithm updates. Google initially called it the “Webspam Algorithm Update”. It was launched to reduce the number of sites appearing in SERPs that used black-hat SEO tactics to get ranked.

What was the Penguin Update?

The Penguin update was launched to reward high-quality websites and to demote the sites that used black-hat SEO tactics to get ranked. A number of websites used manipulative links & followed practices like keyword stuffing, and these were penalized by the Penguin update.

This update impacted around 3% of the search queries initially. In 2016, Penguin was made a part of Google’s core algorithm.

Recovery from the Penguin Update

- Removing Unnatural Links: This was a must after the launch of the Penguin update. Website owners removed all sorts of unnatural links from the website to cope with this update. It also meant removing links that were redirecting users to 3rd parties, to some extent.

- Keyword Optimization: Using keywords naturally was a prerequisite to succeed. Hence, websites that had practised keyword stuffing removed the stuffed keywords and made sure that the keyword usage felt natural. Keyword optimization was a reliable and quite crucial step to recover from the Penguin update.
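A quick way to spot possible keyword stuffing is to measure keyword density, that is, the share of words on a page taken up by a target phrase. The sketch below is an assumption-laden illustration: Google has never published a density limit, so what counts as "too high" is a judgment call, and the sample text is made up.

```python
import re


def keyword_density(text, keyword):
    """Fraction of the words in text that belong to occurrences of keyword."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = keyword.lower().split()
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Count every position where the full phrase appears word for word.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return hits * n / len(words)


# Made-up example of stuffed copy.
stuffed = "Cheap shoes here. Buy cheap shoes, best cheap shoes online."

print(keyword_density(stuffed, "cheap shoes"))  # 0.6
```

Here 6 of the 10 words belong to the phrase "cheap shoes", which is the kind of unnatural repetition the recovery steps above aimed to remove.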

Payday Loan Update

The Payday Loan update arrived in 2013 and quickly became a talking point. It was quite significant, impacting around 0.3% of total search queries. The update primarily focused on cleaning up the results for spammy queries & demoting spammy sites.

What was the Payday Loan Update?

The Payday Loan update was an influential algorithmic change that Google introduced to clean up the results for spammy queries & demote spammy sites. It primarily targeted websites that practised keyword stuffing, used manipulative & spammy backlinks, or lacked credible & authentic content.

This update was Google’s effort to provide users with accurate & trustworthy results. Plus, the search engine aimed to direct the audience to relevant sources & not to sources that were non-contextual.

Recovery from Payday Loan Update

- Getting Rid of Keyword Stuffing: This update forced websites to get rid of keyword stuffing and make sure that keywords were used only naturally on a website.

- Improving the Content Quality: Websites that had low-quality content were simply going nowhere. Hence, it was necessary for them to make sure that the content quality was improved and some value was offered to the users.

- Boosting Domain Authority: Focusing on the DA factor gave the websites an edge, as it gave Google positive signals about a website. Hence, the website owners started focusing on providing top-notch user experience along with reducing spam as much as possible.

Hummingbird Update

Google announced the Hummingbird update in September 2013 and described it as the biggest algorithm update since 2001.

Several Googlers described it as a total rewrite of the core algorithm. However, the effects noticed were subtle. This update changed the SEO landscape completely and took Google search to a whole new level.

What was the Hummingbird Update?

The Hummingbird update interpreted natural language queries and their context to deliver much more accurate results and meet the search intent far better. As mentioned, this update was a rewrite of the core algorithm, and it was the biggest Google algorithm update since 2001.

The primary goal of this update was to deliver precise search results to the searchers and make sure that the search intent was met as much as possible.

Before this update, Google emphasized keyword density and displayed results accordingly for the searched keywords. After the update, Google started understanding the context of the keywords and what searchers meant by them, and displayed the results accordingly.

Recovery from the Hummingbird Update

To cope with the Hummingbird update, the website owners took the following actions.

- Trying to Meet Search Intent: This was a crucial step to cope with the update. Incorporating questions in the content and answering them was a good way to meet the search intent and satisfy the Google algorithm after the Hummingbird update.

- Incorporating Long-Tail Keywords: Websites that solely focused on keywords were largely impacted by this update. After Hummingbird, they started focusing on long-tail keywords and tried to use them efficiently in the content.

- Being Right with the Content Tone: It was necessary that the correct tone was maintained, and the content answered the usual questions that searchers search for. Hence, some websites did play with the content tone, changed it a bit and tried to make sure that the search intent was met.

Image Source: Google

Pigeon Update

The Google Pigeon update, launched in July 2014, changed the way local businesses ranked in organic search. It meant a better search experience for the users. Also, better local search results benefited local businesses, as they became more visible in the search results.

What was the Pigeon Update?

The Pigeon update primarily focused on boosting the local search capabilities, and it enhanced Google search to a considerable extent. Google Maps was worked upon as well. Hence, the location parameters were also improved, which allowed the search engine to deliver more precise results.

There were some glitches with the previous Hummingbird update which were resolved with this update. Let’s understand this update with an example. Say I searched for something like “the best coffee shop in New York”. After the Pigeon update, Google displayed the results that were in New York & near me. Hence, the results that were in New York, yet far away, were given less priority, which wasn’t the case pre-Pigeon.
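The "near me" idea in the coffee shop example can be illustrated by ordering places by their distance from the searcher. This is only a sketch: the haversine formula is a standard way to compute great-circle distance, but how Google actually weighs distance is not public. The shop names are invented and the coordinates are approximate.

```python
from math import asin, cos, radians, sin, sqrt


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two latitude/longitude points."""
    earth_radius_km = 6371.0
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * earth_radius_km * asin(sqrt(a))


def sort_by_distance(user, places):
    """Sort (name, lat, lon) tuples by distance from the user's (lat, lon)."""
    return sorted(places, key=lambda p: haversine_km(user[0], user[1], p[1], p[2]))


# Hypothetical coffee shops, all in New York, at approximate coordinates.
shops = [
    ("Staten Island Roasters", 40.5795, -74.1502),
    ("Midtown Espresso", 40.7580, -73.9855),
    ("Brooklyn Beans", 40.6782, -73.9442),
]
user_location = (40.7614, -73.9776)  # a searcher in Midtown Manhattan

nearest_first = [name for name, _, _ in sort_by_distance(user_location, shops)]
print(nearest_first)
```

All three shops match "coffee shop in New York", yet the one closest to the searcher comes first, which mirrors the behaviour described above.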

Recovery from the Pigeon Update

- GMB Optimization: GMB has always been a crucial tool for local businesses. After the Pigeon update, it became necessary for businesses to optimize their GMBs correctly and make sure that the correct keywords were used there.

- Acquiring Local Backlinks: Websites started focusing on acquiring local backlinks from local websites, blogs, etc. It helped websites to boost their domain authority and overall credibility.

- Focus on the Local Content: Businesses started focusing on local content by using local keywords in the content. Also, they started engaging in the local communities, which meant better visibility in the local market.

- Listing Business in the Local Directories: Businesses started registering their business in authorized local directories like Yelp, Yellow Pages, etc. It ensured better visibility of the businesses locally, which was again the desired result.

Mobilegeddon Update

Google was done listening to the complaints about the poor user experience on mobile. This is the reason it launched the Mobilegeddon update in April 2015. This update brought changes to the mobile search engine results and rankings.

What was the Mobilegeddon Update?

As mentioned, the Mobilegeddon Google algorithm update primarily focused on the mobile-friendliness of a website. The sites that were mobile-friendly were rewarded in mobile search engine rankings, while the sites that weren’t were penalized.

Google clearly stated that there was absolutely no grey area: a page was either mobile-friendly or it wasn’t. Also, the change impacted search results in all languages, and it applied to individual pages and not to the website as a whole.

Eventually, the sites that weren’t mobile-friendly were penalized in terms of rankings. These included sites that had small text, difficult navigation, unplayable videos, or ads on top that forced users to scroll down a lot to see the content. It was Google’s effort to encourage websites to adopt a mobile-friendly design and provide a top-notch user experience to the visitors.

Recovery from the Mobilegeddon Update

Recovery from this update took some effort from the website owners, but it was by no means difficult. Let’s check out the recovery steps from this update below.

- Ensuring Responsiveness of the Mobile Design: Website owners made their layouts adjust dynamically and made sure that they behaved correctly at all breakpoints. The site was specifically optimized for mobile devices, and the user experience was enhanced for the visitors landing on the website from mobile.

- Improving Page Load Speed on Mobile: Before this update, there were plenty of websites that took a long time to load entirely, which meant a poor overall experience. This update forced website owners to work on the page load speed and improve it on mobile devices.

- Working on Texts & Fonts: It might not feel so, but there were several texts & fonts that weren’t displayed correctly on mobile devices. The Mobilegeddon update forced the website owners to change such texts & fonts so that the content was displayed correctly on mobile.

- Boosting Local SEO of a Website: Most mobile searches made had a local search intent. Hence, improving the local SEO of a website was one of the few ways to recover from this update.
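One basic building block of a responsive design is the viewport meta tag, which tells mobile browsers to fit the page to the device width. The sketch below checks a page for it using Python's standard `html.parser` module. It is a simplified illustration: a page can have this tag and still not be mobile-friendly, and the sample HTML strings and function names are made up for this example.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the first or last <meta name="viewport"> tag seen."""

    def __init__(self):
        super().__init__()
        self.viewport_content = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() == "viewport":
            self.viewport_content = attr_map.get("content") or ""


def has_responsive_viewport(html):
    """True if the page declares a viewport with width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    content = finder.viewport_content
    if content is None:
        return False
    return "width=device-width" in content.replace(" ", "").lower()


# Made-up pages for illustration.
responsive_page = (
    '<html><head><meta name="viewport" '
    'content="width=device-width, initial-scale=1"></head><body></body></html>'
)
legacy_page = "<html><head><title>Old site</title></head><body></body></html>"

print(has_responsive_viewport(responsive_page))  # True
print(has_responsive_viewport(legacy_page))      # False
```

Font sizes, tap targets, and load speed still need separate checks, so this is only a first filter for pages that clearly lack a mobile setup.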

RankBrain Update

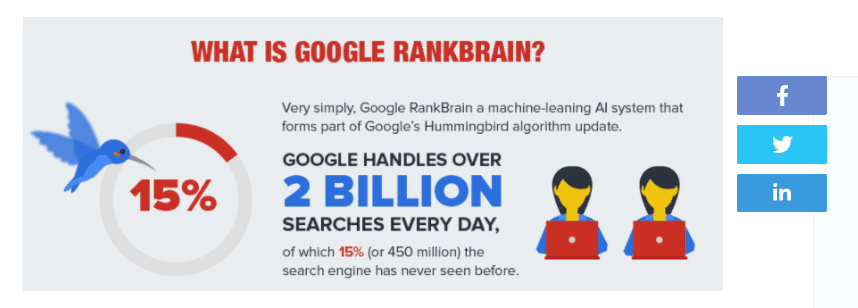

The RankBrain Google algorithm update revolutionized the way search engine results were displayed. It was a part of Google’s Hummingbird algorithm, or rather an enhancement to it. This update was meant to show users the results that best matched their search query.

What was the RankBrain Update?

RankBrain is considered the third most important signal contributing to search rankings. It is a machine learning system that helps the search engine understand the search query and display the results accordingly.

An example of this update? Ever seen the “People also search for” section when you make a specific search? Well, that’s all a part of the RankBrain Google algorithm update.

This update has made Google a bit smarter. For instance, if you search “Joe Biden Birthday”, you will also be shown related results like “Donald Trump”, “Jill Biden”, etc.

Today, the voice-based search query is common and RankBrain helps considerably with it. It helps Google understand the next possible queries and display results accordingly. For instance, I search for “Eiffel Tower”. The next query might be “Eiffel Tower height”, then “The tallest tower in the world”. RankBrain simply predicts the search queries, conveys them to the search engine, and then the results are displayed accordingly.

Recovery from the RankBrain Update

Check out the recovery steps that the website owners followed to cope with this update below.

- Understanding the Search Intent & Preparing Content Accordingly: Website owners analyzed the user intent behind the queries made and modified the content accordingly. They tried answering commonly asked questions related to the primary keywords in their content to meet the search intent as much as possible.

- Proper Use of Long-Tail Keywords: Long-tail keywords became much more important after the RankBrain update. Website owners researched important long-tail keywords in depth and incorporated them in their content.

- Acquiring Quality Backlinks: How did backlinks help here? Well, when a domain linked to a website stating that it answers a specific question, it gave the search engine a strong signal that the website meets the search intent. Hence, such websites were favoured in terms of rankings.

Image Source: https://neilpatel.com/

Fred Update

Fred Update primarily targeted the websites whose only motive was to earn revenue by displaying excessive ads. Numerous content sites provided thin content & excessive ads to monetize the site. These sites by no means solved the user’s problem or delivered any value. They just focused on generating revenue.

What was the Fred Update?

Google Fred is an algorithm that targets sites with low-value content and sites with aggressive monetization that deliver no real value to the users. You might have visited several sites that had little content but excessive ads and weren’t really useful. Well, we term them dummy sites, and they were targeted & penalized by Google’s Fred algorithm update.

After the introduction of this update, dummy sites noticed around a 50-90% drop in website traffic, which was a massive dip for them.

The Fred algorithm update was one of the few updates that had an immediate impact on websites. Sites struggled for several months to regain the lost traffic.

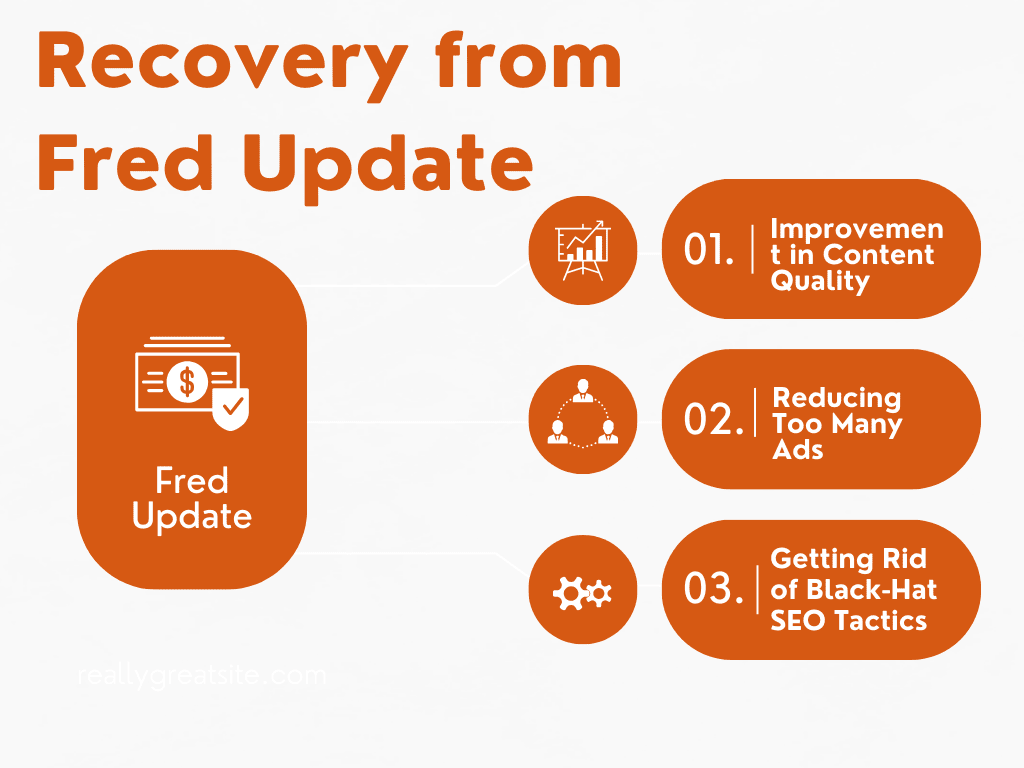

Recovery from Fred Update

As already mentioned, recovery from the Fred update was never easy. Some recovery steps taken by the affected sites to restore their website rankings are:

- Improvement in Content Quality: This was the first and the most important thing that websites were required to follow to cope with this update. The sites with thin content were specifically targeted, and hence, sites with low-quality content were required to work on the same and improve the overall content quality.

- Reducing Too Many Ads: Excessive interference of ads with content was another aspect considered by this update. Hence, reducing the excessive ads and optimizing the ad positions was another way to recover from this update. Proper optimization of the ads ensured both a smooth user experience & the desired results in terms of monetization.

- Getting Rid of Black-Hat SEO Tactics: Google has always been strict with the sites following black-hat SEO tactics, and the norms became a lot stricter after the Fred algorithm update. Hence, the websites were forced to get rid of all sorts of black-hat SEO tactics and make sure that they followed only an organic approach to get ranked.

Frequently Asked Questions

How does the Google algorithm work?

Well, no one other than Google itself knows that. We as SEO professionals just know the factors that the algorithm considers when ranking a website, but how the backend flow works beyond crawling and indexing is not exactly known, and Google has not revealed it.

How often does Google update its algorithm?

Well, the number is never fixed. Google continually works on reducing spam, and hence it keeps on introducing minor & major tweaks to its algorithm.

How do I know if my website is affected by a Google algorithm update?

If you notice a degradation in website rankings for a while after the algorithm is updated, you might be impacted by the update. However, do wait for some time before taking action, as numerous websites see a temporary degradation in their rankings right after an update, a phenomenon known as the “Google Dance”. If that’s the case with you, it will all fall into place after a while, and if it doesn’t, you might be required to take action.
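The "wait, then compare" advice can be sketched as a small calculation: compare the average ranking position before an update with the average after a settling period. Everything specific here is an assumption for illustration: the update date, the 7-day settling and comparison windows, and the position data, which would normally come from a rank-tracking export.

```python
from datetime import date, timedelta
from statistics import mean


def position_change(positions, update_day, settle_days=7, window_days=7):
    """Average position after the settling period minus average position before.

    positions: dict mapping date -> average ranking position (1 is best).
    A positive result means rankings got worse. Returns None if data is missing.
    """
    before_start = update_day - timedelta(days=window_days)
    after_start = update_day + timedelta(days=settle_days)
    after_end = after_start + timedelta(days=window_days)

    before = [p for d, p in positions.items() if before_start <= d < update_day]
    after = [p for d, p in positions.items() if after_start <= d < after_end]
    if not before or not after:
        return None
    return mean(after) - mean(before)


# Hypothetical data: stable at position 4, volatile for a week, then settling at 9.
update_day = date(2024, 3, 5)
positions = {}
for offset in range(-7, 0):
    positions[update_day + timedelta(days=offset)] = 4.0
for offset in range(0, 7):
    positions[update_day + timedelta(days=offset)] = 12.0  # "Google Dance" week
for offset in range(7, 14):
    positions[update_day + timedelta(days=offset)] = 9.0

change = position_change(positions, update_day)
print(change)  # 5.0
```

The volatile first week is ignored on purpose, so only a drop that persists after the settling period shows up as a positive change.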

How do I ensure that my website never gets affected due to Google algorithm updates?

Write amazing & value-delivering content on your website. Follow the right link-building strategy to acquire organic backlinks. Make sure not to follow any spammy tactics to get your website ranked. Stay away from practices like keyword stuffing, bad backlinking, & excessive ads. Focus on providing relevant value to the users landing on your site. The more organic your traffic acquisition & monetization strategies are, the easier it will be for your website to stay unaffected by Google algorithm updates.

How do I keep track of Google algorithm updates?

It’s never a difficult task. Just follow authentic SEO blogs to stay updated with the latest algorithm updates. Also, keep an eye on the announcements from Google. Make it a practice to check Google’s announcements & blogs regularly.

Final Words

Well, it was long, wasn’t it? But, with the number of algorithm updates introduced by Google and the impact they left behind, it was important for us to cover them in detail.

We have listed all the major Google algorithm updates that you must know, along with all the crucial information about each. As mentioned, Google works continuously on keeping spam away and hence introduces algorithm updates regularly. We will keep updating our article with the launch of every Google algorithm update to give you all the required information about it.

If you still want more information about any of the algorithm updates listed above, or if you are looking for professional help to cope with any of the Google algorithm updates, you need not look any further than us. Contact us now to safeguard your website against the strict Google algorithms.
