Crawlerlist: Optimizing Web Crawlers for Maximum Website Performance

In the ever-evolving digital world, web crawlers play a pivotal role in helping search engines discover and index content. Whether you’re a website owner, an SEO expert, or a digital marketer, knowing how to optimize your site for a crawlerlist is crucial.

In this comprehensive guide, we’ll dive deep into the concept of a crawlerlist, its importance, how web crawlers function, and the best practices for leveraging crawlers for maximum website visibility. By the end of this article, you’ll have a better understanding of what a crawlerlist is and how it can influence your website’s ranking on search engines.

What is a Crawlerlist?

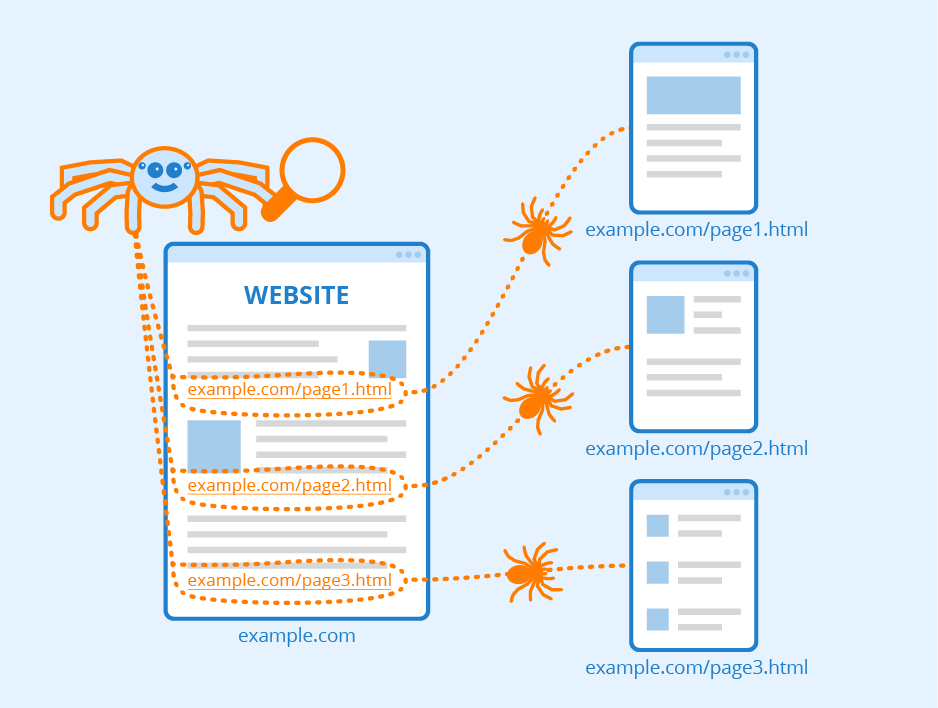

A crawlerlist is essentially the list of URLs that web crawlers (also called spiders), such as Google’s Googlebot, Bingbot, or Yahoo Slurp, queue up and scan for content; search engineers often call this queue the crawl frontier. Crawlers work by following links from one page to another and passing the content they find back to the search engine for indexing. Each newly discovered URL is added to the crawlerlist and explored in turn, so that, ideally, every linked page on the website gets reviewed.
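
The queue-and-follow behavior described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how Googlebot or any real crawler is implemented: the website is modeled as a hypothetical in-memory dictionary mapping each URL to the links on that page (standing in for real HTTP fetching), and the crawlerlist is a first-in, first-out queue.

```python
from collections import deque


def crawl(site, start, max_pages=100):
    """Breadth-first crawl over an in-memory link graph.

    `site` is a hypothetical stand-in for the web: it maps each URL to the
    URLs linked from that page. Returns URLs in the order they were visited.
    """
    queue = deque([start])  # the crawlerlist: URLs waiting to be visited
    seen = {start}          # never queue the same URL twice
    visited = []

    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)  # a real crawler would fetch the page here and pass it on for indexing
        for link in site.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


site = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/about": [],
    "/blog/post-1": [],
    "/orphan": [],  # no page links here, so a link-following crawler never finds it
}
print(crawl(site, "/"))  # ['/', '/blog', '/about', '/blog/post-1']
```

The hypothetical `/orphan` page never shows up in the result: nothing links to it, so the crawler has no way to discover it. Pages like that benefit from being listed explicitly, for example in a sitemap.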

A well-optimized crawlerlist can have a major impact on how efficiently a search engine indexes your site, which in turn influences your website’s ranking and visibility on search results pages. If a web page never makes it onto the crawlerlist, search engines may overlook it, making it very hard for users to find that content. Therefore, ensuring that all essential pages are part of your crawlerlist is key to effective SEO.

How Web Crawlers Use a Crawlerlist

Web crawlers systematically work through the URLs on the crawlerlist, fetching each page and sending what they find back to the search engine for indexing. The search engine then analyzes each page’s content, keywords, internal links, and metadata to determine how relevant the page is to particular search queries.

Crawlers don’t stop at collecting content, however: they also reveal problems in the overall site structure, such as dead links, duplicate content, and server errors. A clean, well-structured crawlerlist helps the entire site get indexed accurately and efficiently.
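
The dead-link and duplicate-content checks mentioned above can be illustrated with a small audit function. It assumes pages have already been fetched into a hypothetical dictionary mapping each URL to its HTTP status code and body text, and it only catches exact duplicates via a SHA-256 hash; real systems typically also look for near-duplicates.

```python
import hashlib
from collections import defaultdict


def audit_pages(pages):
    """Flag dead links and exact-duplicate content.

    `pages` maps URL -> (status_code, body_text); this input format is a
    hypothetical assumption for illustration.
    """
    # Any 4xx/5xx response counts as a dead or broken URL.
    dead = sorted(url for url, (status, _) in pages.items() if status >= 400)

    # Group successfully fetched pages by a hash of their body text.
    by_hash = defaultdict(list)
    for url, (status, body) in pages.items():
        if status == 200:
            digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
            by_hash[digest].append(url)
    duplicates = [sorted(urls) for urls in by_hash.values() if len(urls) > 1]
    return dead, duplicates


pages = {
    "/shoes": (200, "Red running shoes"),
    "/shoes?ref=email": (200, "Red running shoes"),
    "/old-sale": (404, ""),
    "/about": (200, "About us"),
}
dead, dups = audit_pages(pages)
print(dead)  # ['/old-sale']
print(dups)  # [['/shoes', '/shoes?ref=email']]
```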

Importance of Crawlerlist in SEO

Understanding the crawlerlist is vital in today’s competitive SEO landscape. A comprehensive and optimized crawlerlist can ensure that all of your site’s valuable pages get indexed by search engines.

If certain pages are not part of the crawlerlist, they might be ignored by web crawlers, resulting in those pages not showing up in search results. By making sure your crawlerlist includes all high-priority URLs, you can help search engines better understand the value and relevance of your website.

This can lead to improved rankings and greater online visibility. Additionally, a well-organized crawlerlist reduces the chances of indexing errors and helps ensure that crawlers don’t waste your crawl budget (the number of URLs a search engine is willing to crawl on your site in a given period) on irrelevant or low-priority pages.

Key Components of a Crawlerlist

A crawlerlist includes the URLs of important pages on your website. Typically, these URLs are structured in a way that follows the site’s hierarchy, ensuring that crawlers can quickly navigate through main pages, subpages, and posts. However, an efficient crawlerlist doesn’t include all pages on your site, just the most relevant ones.

For example, pages with outdated content, admin pages, or duplicate pages should be excluded from the crawlerlist. Similarly, non-canonical URLs (duplicate content served at different URLs) should not appear on the crawlerlist, as they can dilute the SEO value of your site.

A strong crawlerlist should be free of redundant or low-value pages that could slow down the indexing process.
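
The inclusion rules above can be expressed as a simple filter. In this sketch each candidate page is a record with hypothetical fields `url`, `canonical` (the URL the page declares as its preferred version), and `outdated`; the excluded prefixes are example patterns, not a universal list.

```python
from dataclasses import dataclass

# Example exclusion patterns only; adjust to your own site's URL structure.
EXCLUDED_PREFIXES = ("/admin/", "/wp-admin/", "/login")


@dataclass
class Page:
    url: str
    canonical: str          # the URL the page declares as its preferred version
    outdated: bool = False  # hypothetical flag set during a content audit


def build_crawlerlist(pages):
    """Keep canonical, current, non-admin URLs, each listed once."""
    crawlerlist = []
    for page in pages:
        if page.url.startswith(EXCLUDED_PREFIXES):
            continue  # admin and login pages offer no search value
        if page.url != page.canonical:
            continue  # non-canonical duplicate; keep only the preferred version
        if page.outdated:
            continue  # stale content is pruned rather than re-crawled
        if page.url not in crawlerlist:
            crawlerlist.append(page.url)
    return crawlerlist


pages = [
    Page("/", "/"),
    Page("/products/widget", "/products/widget"),
    Page("/products/widget?color=blue", "/products/widget"),
    Page("/admin/settings", "/admin/settings"),
    Page("/promo-2019", "/promo-2019", outdated=True),
]
print(build_crawlerlist(pages))  # ['/', '/products/widget']
```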

Best Practices for Optimizing a Crawlerlist

Optimizing your crawlerlist can make a huge difference to search engine performance. Website owners and SEO professionals should follow several best practices to keep their crawlerlist efficient, relevant, and crawl-friendly. First, make sure that only relevant and important URLs are included on the crawlerlist.

Pages that don’t offer significant SEO value should be excluded to conserve the crawl budget and direct crawlers to higher-priority content. Second, keep your crawlerlist clean by regularly auditing it for outdated, irrelevant, or broken links. A crawlerlist full of dead or irrelevant links can waste a crawler’s time, potentially leading to missed opportunities to index valuable content.

Another important best practice is to make sure your crawlerlist uses the canonical URL for each page. A canonical URL, declared with a rel="canonical" link element, points crawlers to the main version of a page and so prevents duplicate content issues.
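
Canonical URLs are usually declared in a page’s head with a `<link rel="canonical" href="...">` element. The sketch below, which uses only Python’s standard-library `html.parser`, reads that element so a page can be checked before it goes on the crawlerlist. `canonical_url` is a hypothetical helper name, and relative `href` values are resolved against the page URL.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attrs = dict(attrs)  # attribute values may be None, hence the `or ""`
        rels = (attrs.get("rel") or "").lower().split()
        if "canonical" in rels and attrs.get("href"):
            self.canonical = attrs["href"]


def canonical_url(page_url, html):
    """Return the declared canonical URL (made absolute), or page_url if none."""
    finder = CanonicalFinder()
    finder.feed(html)
    if finder.canonical is None:
        return page_url
    return urljoin(page_url, finder.canonical)


html = '<head><link rel="canonical" href="/products/widget"></head>'
print(canonical_url("https://example.com/products/widget?color=blue", html))
# https://example.com/products/widget
```

A page whose canonical URL differs from its own address is a duplicate and can be left off the list in favor of the URL it points to.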

Lastly, leverage internal linking to help crawlers navigate through your site efficiently. Internal links signal to crawlers which pages are most important, ensuring that they are explored and indexed properly.
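
One way to see which pages your internal linking treats as important is to count how many internal links point at each page. This sketch assumes a hypothetical mapping of each page URL to the internal URLs it links to (for example, built from a site crawl); pages with zero inbound links are "orphans" that link-following crawlers cannot reach.

```python
from collections import Counter


def inbound_link_counts(links):
    """Count internal links pointing at each page.

    `links` maps each page URL to the internal URLs it links to
    (a hypothetical input, e.g. assembled from a site crawl).
    """
    counts = Counter({url: 0 for url in links})  # start every known page at zero
    for source, targets in links.items():
        for target in set(targets):  # count each linking page once
            if target != source:     # ignore self-links
                counts[target] += 1
    return counts


links = {
    "/": ["/services", "/blog"],
    "/blog": ["/blog/guide", "/services"],
    "/blog/guide": ["/services"],
    "/services": ["/"],
    "/old-landing-page": [],
}
counts = inbound_link_counts(links)
orphans = [url for url, n in counts.items() if n == 0]
print(counts.most_common(1))  # [('/services', 3)]
print(orphans)                # ['/old-landing-page']
```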

Common Mistakes in Crawlerlist Optimization

There are several common mistakes that can undermine the effectiveness of your crawlerlist. One of the most frequent is overloading the crawlerlist with too many URLs. Search engines allot each site a limited crawl budget, so a crawler will only fetch a certain number of pages before moving on.

If your crawlerlist contains too many low-value or irrelevant pages, important pages might be overlooked, and the overall efficiency of the crawl will suffer. Another mistake is failing to update the crawlerlist regularly. Websites change over time, so the crawlerlist should be updated to add new content and remove outdated pages.
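
The effect of an overloaded crawlerlist can be illustrated with a deliberately simplified model. Here a crawler is assumed to fetch URLs in list order until a fixed, hypothetical budget runs out; real search engines decide crawl order and budget themselves and do not expose them like this. The `is_low_value` rule is an example only.

```python
# Simplified model (an assumption for illustration): the crawler fetches URLs
# in list order and stops when its budget runs out.
def crawled_within_budget(crawlerlist, budget):
    """Return the URLs a budget-limited, in-order crawler would reach."""
    return crawlerlist[:budget]


def is_low_value(url):
    """Example rule only: internal search results and tag archives."""
    return url.startswith(("/search", "/tag/"))


bloated = ["/search?q=shoes", "/tag/misc?page=14", "/", "/pricing", "/blog/new-guide"]
lean = [url for url in bloated if not is_low_value(url)]

print(crawled_within_budget(bloated, 3))  # ['/search?q=shoes', '/tag/misc?page=14', '/']
print(crawled_within_budget(lean, 3))     # ['/', '/pricing', '/blog/new-guide']
```

With the bloated list, two of the three fetches go to low-value URLs and `/pricing` and `/blog/new-guide` are never reached; trimming the list lets the same budget cover them.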

Mishandling redirects is another common pitfall. When a URL changes, the old address should 301-redirect (permanently) to the new one, and the crawlerlist should be updated to list the new destination URL so that crawlers are pointed straight to the correct page.
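
Keeping redirecting URLs off the list means replacing each one with its final destination. A minimal sketch, assuming a hypothetical mapping of old URLs to the URLs they 301-redirect to; `resolve_redirects` and `max_hops` are illustrative names.

```python
def resolve_redirects(crawlerlist, redirects, max_hops=10):
    """Replace each URL with its final redirect destination.

    `redirects` maps an old URL to the URL it 301-redirects to
    (a hypothetical input, e.g. collected during a site crawl).
    Chains are followed up to `max_hops`; loops raise ValueError.
    """
    resolved = []
    for url in crawlerlist:
        seen = {url}
        hops = 0
        while url in redirects:
            url = redirects[url]
            hops += 1
            if url in seen or hops > max_hops:
                raise ValueError(f"redirect loop or overly long chain at {url}")
            seen.add(url)
        if url not in resolved:  # several old URLs may share one destination
            resolved.append(url)
    return resolved


redirects = {
    "/old-pricing": "/pricing-2023",
    "/pricing-2023": "/pricing",
}
print(resolve_redirects(["/", "/old-pricing", "/pricing"], redirects))
# ['/', '/pricing']
```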

How to Create and Manage a Crawlerlist

Creating and managing a crawlerlist may seem daunting, but with the right tools and strategies, it can be done efficiently. Start by using tools like Google Search Console, Screaming Frog, or Ahrefs to identify which pages are currently being crawled and indexed.
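
Assuming you have exported the list of URLs a tool reports as crawled, comparing it with the pages you intend to have crawled comes down to two set differences. In this sketch both lists are hypothetical, hard-coded inputs; no particular tool’s export format is modeled, and `crawl_gaps` is an illustrative helper name.

```python
def crawl_gaps(intended, crawled):
    """Compare the intended crawlerlist with what was actually crawled."""
    intended, crawled = set(intended), set(crawled)
    missing = sorted(intended - crawled)      # important pages crawlers haven't reached
    unnecessary = sorted(crawled - intended)  # pages using crawl budget you didn't plan for
    return missing, unnecessary


intended = ["/", "/pricing", "/blog/new-guide"]
crawled = ["/", "/pricing", "/search?q=shoes", "/cart"]
missing, unnecessary = crawl_gaps(intended, crawled)
print(missing)      # ['/blog/new-guide']
print(unnecessary)  # ['/cart', '/search?q=shoes']
```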

These tools can help you assess whether any high-priority pages are missing from the crawlerlist or whether low-value pages are being crawled unnecessarily. Once you’ve identified which pages should be included, you can use a robots.txt file and a sitemap to guide crawlers to the most important pages. A robots.txt file tells crawlers which pages to skip, conserving crawl budget for higher-priority pages. Note that robots.txt controls crawling, not indexing: a disallowed URL can still appear in search results if other pages link to it.
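
Python’s standard-library `urllib.robotparser` can show how a compliant crawler interprets robots.txt rules. The robots.txt content and URLs here are illustrative assumptions, and `crawl_permissions` is a hypothetical helper. Python’s parser may not match every search engine’s interpretation in edge cases, so treat this as a quick sanity check rather than a substitute for the search engines’ own testing tools.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: the blocked paths are example assumptions.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""


def crawl_permissions(robots_txt, urls, user_agent="Googlebot"):
    """Map each URL to whether robots.txt lets `user_agent` fetch it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse rules from text instead of fetching
    return {url: parser.can_fetch(user_agent, url) for url in urls}


urls = [
    "https://example.com/blog/new-guide",
    "https://example.com/cart/checkout",
    "https://example.com/search?q=shoes",
]
for url, allowed in crawl_permissions(ROBOTS_TXT, urls).items():
    print(url, allowed)
# https://example.com/blog/new-guide True
# https://example.com/cart/checkout False
# https://example.com/search?q=shoes False
```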

Similarly, submitting a sitemap to Google (for example, through Google Search Console) lists all essential pages in one place, making it easier for crawlers to find and index them, although submission does not guarantee indexing. Finally, update the crawlerlist frequently, especially when new content is added or the site structure changes significantly. Regular audits will keep the crawlerlist lean and efficient.

The Role of Sitemaps and Robots.txt in Crawlerlist Optimization

Sitemaps and robots.txt files are two critical tools for optimizing your crawlerlist. A sitemap is a file, usually in XML format, that lists the important URLs on your site and acts as a guide for web crawlers. It’s particularly useful for larger sites with thousands of pages because it helps direct crawlers to high-priority content. A robots.txt file, on the other hand, tells crawlers which pages to avoid.
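
The sitemaps.org protocol defines the format: a `<urlset>` root element in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace, with one `<url>` entry per page holding a `<loc>` and, optionally, a `<lastmod>` date. A minimal generator using Python’s `xml.etree.ElementTree` might look like this; `build_sitemap` and its `(url, lastmod)` input format are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap XML string from (url, lastmod) pairs.

    `lastmod` is a W3C date string such as "2024-05-01", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > here
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/pricing", None),
]))
```

Because ElementTree escapes characters such as `&` automatically, query-string URLs come out in the entity-escaped form the protocol requires. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites split their URLs across several sitemaps listed in a sitemap index file.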

This file is useful for preventing crawlers from wasting resources on low-value pages such as duplicate content, shopping cart pages, or user-specific content. Used together, the two tools help keep your crawlerlist highly optimized, which can lead to more efficient crawling, faster indexing, and better rankings.
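
Using both tools in tandem also means keeping them consistent: a URL listed in the sitemap but blocked by robots.txt sends crawlers mixed signals. This sketch flags such conflicts with the standard-library robots.txt parser; the robots.txt rules and sitemap URLs are illustrative assumptions, and `blocked_sitemap_urls` is a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser


def blocked_sitemap_urls(robots_txt, sitemap_urls, user_agent="*"):
    """Return sitemap URLs that robots.txt disallows for `user_agent`.

    Listing a URL in the sitemap while blocking it in robots.txt sends
    crawlers contradictory signals, so these are worth fixing.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in sitemap_urls if not parser.can_fetch(user_agent, url)]


robots_txt = "User-agent: *\nDisallow: /cart/\n"
sitemap_urls = [
    "https://example.com/",
    "https://example.com/pricing",
    "https://example.com/cart/summer-sale",
]
print(blocked_sitemap_urls(robots_txt, sitemap_urls))
# ['https://example.com/cart/summer-sale']
```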

Conclusion: Maximizing Website Performance with an Optimized Crawlerlist

A well-optimized crawlerlist is the foundation of good SEO. It ensures that search engine crawlers can efficiently navigate and index all the essential pages on your website, ultimately improving your site’s visibility and ranking.

By following best practices, avoiding common mistakes, and leveraging tools like sitemaps and robots.txt files, you can optimize your crawlerlist to drive better results. Remember, a clean and efficient crawlerlist benefits not just search engines but your overall digital strategy.

So, keep auditing and refining your crawlerlist to ensure your website stays relevant in search results.
